Eliminating the noise in vision technology

An artificial intelligence algorithm that uses few resources could help robotic systems find their way in poor light

The navigation of robotic systems, such as drones or self-driving cars, requires computer vision technology that performs accurately even in challenging low-light conditions, such as moonlight. This capability is particularly important for applications in defense, law enforcement and space exploration.

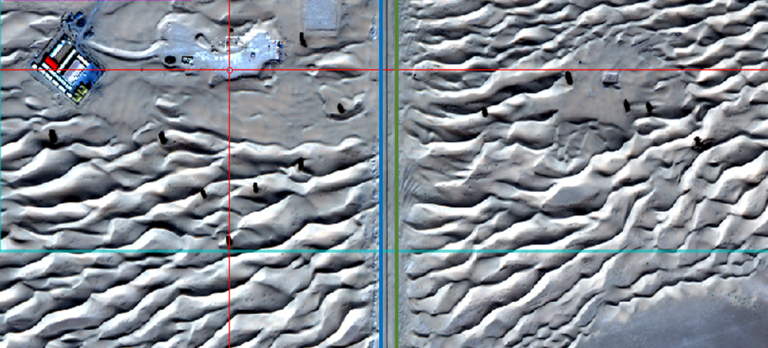

Existing technology uses neuromorphic cameras, which are equipped with sensors that mimic the functions of the human retina. Unlike conventional cameras, which capture individual frames, neuromorphic cameras report changes in the scene over time and assemble them into a continuous stream of events. They can operate in low-light conditions while using far less power than conventional cameras. However, the performance of neuromorphic cameras is typically hampered by noise.

A team led by Yahya Zweiri, Director of the Advanced Research and Innovation Center and Associate Chair of the Aerospace Engineering Department at Khalifa University, has designed and developed an artificial intelligence algorithm that filters noise from event streams while preserving data from real features in the visual scene. The algorithm is based on a graph neural network (GNN) combined with transformers, known as a GNN-transformer. It uses spatiotemporal correlations between events captured from the camera’s surroundings to determine whether an incoming raw event is noise or a genuine feature, such as the edge of an object.
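The intuition behind using spatiotemporal correlations can be illustrated with a much simpler classical heuristic. The sketch below is not the team's GNN-transformer: it is a basic nearest-neighbor filter that keeps an event only if an adjacent pixel fired shortly before it. The event layout `(x, y, timestamp, polarity)`, the function name `filter_isolated_events` and the 10 ms window `dt` are assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical event layout: (x, y, timestamp in seconds, polarity)
Event = Tuple[int, int, float, int]


def filter_isolated_events(
    events: List[Event], width: int, height: int, dt: float = 0.01
) -> List[Event]:
    """Keep an event only if one of its 8 neighboring pixels fired within dt seconds.

    Assumes `events` is sorted by timestamp. This is a simple illustrative
    heuristic, not the learned classifier described in the article.
    """
    # Most recent event time seen at each pixel
    last_ts = [[float("-inf")] * width for _ in range(height)]
    kept: List[Event] = []
    for x, y, t, p in events:
        supported = False
        for ny in range(max(0, y - 1), min(height, y + 2)):
            for nx in range(max(0, x - 1), min(width, x + 2)):
                if (nx, ny) != (x, y) and t - last_ts[ny][nx] <= dt:
                    supported = True
        if supported:
            kept.append((x, y, t, p))
        # Record the event even if it was dropped, so it can support later ones
        last_ts[y][x] = t
    return kept


# Three events tracing a moving edge, then one isolated event far away
stream = [(5, 5, 0.000, 1), (6, 5, 0.001, 1), (7, 5, 0.002, 1), (20, 20, 0.003, 1)]
clean = filter_isolated_events(stream, width=32, height=32)
```

A fixed rule like this discards the first event of every edge and cannot adapt to changing motion or lighting, which is the kind of limitation a learned model is meant to address.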

“The proposed algorithm is the fastest among state-of-the-art models owing to the processing of events efficiently on a central processing unit,” says Zweiri. “This eliminates the need for advanced hardware, making the model ideal for settings with limited computational power and resource-constrained platforms.”

The GNN-transformer incorporates an EventConv layer, which distinguishes between real activity and noise events by operating on graphs constructed from the camera’s raw data. “This captures spatiotemporal events, while accounting for the asynchronous nature of the camera stream,” says Yusra Alkendi, a PhD graduate in Aerospace Engineering and member of the team. The model has been tested on publicly available datasets to show that the algorithm works in general settings with different motion dynamics and different lighting conditions.
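The article does not say how the graphs are constructed from the raw data. One common approach for event data, shown here purely as an assumption, treats each event as a node and connects two events when they are close in both pixel distance and time. The function name `build_event_graph` and the thresholds `radius` and `dt` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical event layout: (x, y, timestamp in seconds, polarity)
Event = Tuple[int, int, float, int]


def build_event_graph(
    events: List[Event], radius: float = 2.0, dt: float = 0.005
) -> List[Tuple[int, int]]:
    """Return undirected edges (i, j), i < j, between events that are near in space and time.

    Assumes `events` is sorted by timestamp. An illustrative sketch only;
    the graph construction used by the published model may differ.
    """
    edges: List[Tuple[int, int]] = []
    for i, (xi, yi, ti, _) in enumerate(events):
        for j in range(i + 1, len(events)):
            xj, yj, tj, _ = events[j]
            if tj - ti > dt:
                break  # later events are even further away in time
            if (xi - xj) ** 2 + (yi - yj) ** 2 <= radius ** 2:
                edges.append((i, j))
    return edges


# Three events along a moving edge, then one isolated event far away
stream = [(5, 5, 0.000, 1), (6, 5, 0.001, 1), (7, 5, 0.002, 1), (20, 20, 0.003, 1)]
edges = build_event_graph(stream)
```

In this toy stream the three edge events form a connected cluster, while the distant event has no neighbors. A graph layer can use that kind of connectivity as evidence when separating real activity from noise.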

Alkendi appreciates the supportive research environment at Khalifa University (KU). “KU has generously provided me with hands-on research experience using cutting-edge tools and technologies, enabling the shaping of global research and benefiting our community,” she says. “The guidance of distinguished professors has led to remarkable milestones, for example, the accolade of Top 1% Journal Publication in August 2022 and other research papers in prestigious engineering journals.”

Looking ahead, Zweiri and Alkendi are aiming to improve the performance of neuromorphic vision systems further by combining their algorithm with other event-based computer vision algorithms. These enhancements could encompass self-localization, object detection, object tracking and object recognition, among others.

Reference

1. Y. Alkendi, R. Azzam, A. Ayyad, S. Javed, L. Seneviratne and Y. Zweiri, “Neuromorphic Camera Denoising Using Graph Neural Network-Driven Transformers,” IEEE Transactions on Neural Networks and Learning Systems, 2022.