The many faces of robotics

At the forefront of research into embodied intelligence, Irfan Hussain's work spans a remarkable breadth of real-life applications as he fuses physical and cognitive intelligence into wearable and field-based robotic systems.

Why did you choose to work on AI and robotics at Khalifa University?

When I first arrived at KU, I thought that I’d stay for one year and explore the opportunities. My perspective totally changed. Robotics and AI are emerging in the Middle East, and the level of interest and need in this area is striking.

At the end of the day, all robotic systems have different applications, but the fundamental components are the same. Humans rely on senses such as sight, and on muscles, to interact with the world. In robotics the equivalents are called sensors and actuators, and if you have the ability to design them in the first place, then it’s very easy to develop them across different fields.

What’s the most fascinating aspect of your research?

Real-world applications. A lot of the research or technology that I have developed has some kind of real-world use that goes beyond the lab. Examples include a stroke rehabilitation device, underwater robots for coral reef conservation, and lifelike robots for wildlife monitoring.

How can robots help with wildlife monitoring?

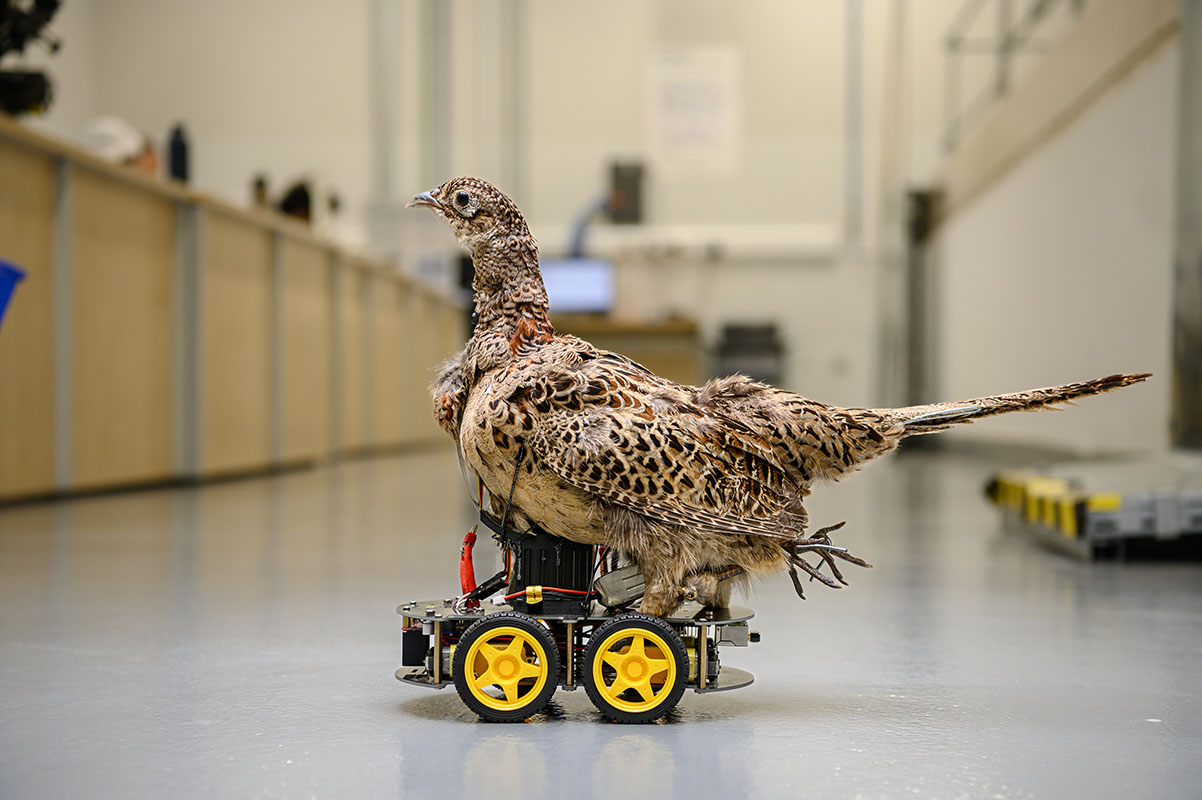

We’re building a robot that looks and acts like the Houbara bird, an important yet vulnerable species in the UAE. Our goal is for this robot to interact with natural birds, monitor their behavior, and help with conservation.

To make the robot look just like a real Houbara, we first scan different versions of the bird to create a 3D model. Then we use 3D printers to build the body, adding a lifelike skin on top, making sure its texture is a perfect match. Inside, we fit the robot with sensors, cameras, and microphones. Finally, we train the AI to see and understand the real birds, so that our robot can interact with them in a completely natural way.

Tell us about your marine robotics work.

At KU we have a unique underwater pool, one of only a few in the world that can simulate realistic ocean conditions. We use wave generators to create waves with different strengths and directions, and we have tracking systems to measure them, allowing us to test our underwater robots under real-world conditions.

This facility is crucial for performing various underwater activities. For example, we use our robots for aquaculture monitoring in extreme environments that are too dangerous for human divers. We also have projects focused on coral reef inspections. Because of environmental changes, corals are in danger of extinction, and we’re using our underwater robots and AI solutions to monitor their health.

Which application really inspires you?

There is a very simple device that I developed to help stroke patients who have lost the ability to use one side of their body, which makes everyday tasks such as picking something up nearly impossible. The device is a soft robotic finger that compensates for the function the patient has lost and enables them to grasp objects. After using the device, one patient who had had a stroke 10 years before said it was the first time since then that he had used his impaired hand, and that he started to feel that it belonged to his body again.